wangwei

机器学习, 数据采集, 数据分析, web网站,IS-RPA,APP

python基础 数据分析 Pandas • 0 回帖 • 1.1K 浏览 • 2019-07-29 14:20:52

机器学习, 数据采集, 数据分析, web网站,IS-RPA,APP

python基础 数据分析 Pandas • 0 回帖 • 1.1K 浏览 • 2019-07-29 14:20:52

pandas 分块读取大规模数据

__author__ = '未昔/angelfate'

__date__ = '2019/7/2 1:30'

# -*- coding: utf-8 -*-

path = r'E:\python\Study\BiGData\new_data.csv'

@timeit

def test_1():

print('test_1')

df = pd.read_csv(path, engine='python', encoding='gbk')

@timeit

def test_2():

print('test_2')

df = pd.read_csv(path, engine='python', encoding='gbk', iterator=True) # 分块,每一块是一个chunk,之后将chunk进行拼接;

loop = True

chunkSize = 10000

chunks = []

while loop:

try:

chunk = df.get_chunk(chunkSize)

chunks.append(chunk)

except StopIteration:

loop = False

print("Iteration is stopped.")

df = pd.concat(chunks, ignore_index=True)

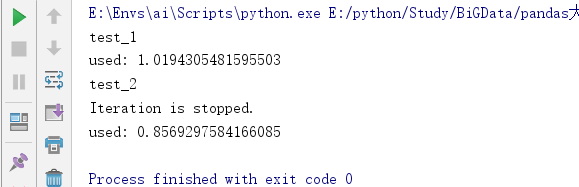

test_1()

test_2()

这个是分别读取 1w 条数据的一个 csv 文件,所用的时间对比: