wangwei

Machine learning, data collection, data analysis, web sites, IS-RPA, APP

Hadoop ecosystem, RPA, other experience, case studies • 0 replies • 1.4K views • 2019-12-05 10:00:02

Experience | Hadoop ecosystem: Python + MapReduce + wordcount

Start the Hadoop processes

Upload the input files to HDFS

hdfs dfs -put /home/hadoop/hadoop/input /user/hadoop/input

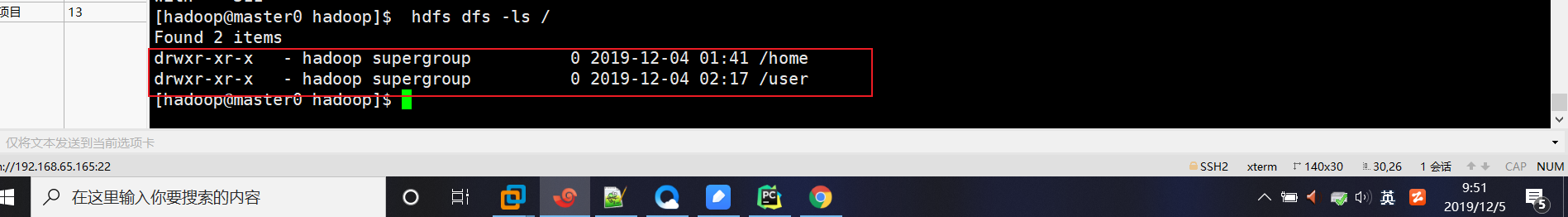

List the files currently in HDFS

[hadoop@master0 hadoop]$ hdfs dfs -ls /

Found 1 items

drwxr-xr-x - hadoop supergroup 0 2019-12-04 02:17 /user

Check that the files were uploaded correctly

Run the example program to count how many times each word occurs

bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.0.jar \
wordcount /user/hadoop/input/ /user/hadoop/output

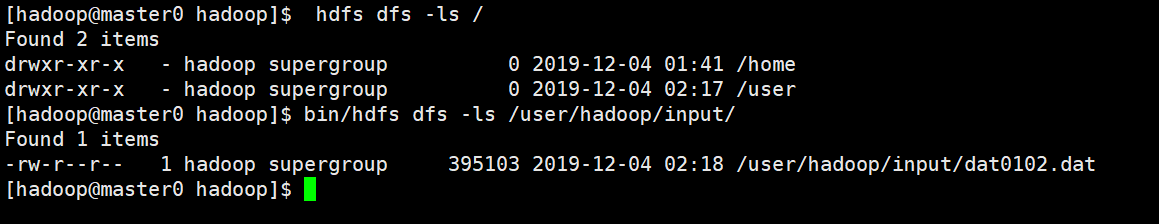
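As a rough mental model of what this job computes, the sketch below counts whitespace-separated tokens in memory. It is an illustration only, not the code inside the examples jar; the function name `count_words` and the sample sentence are made up here. Because tokens are split on whitespace alone, punctuation stays attached to the word, so a token such as `work,` is counted separately from `work`.

```python
from collections import Counter


def count_words(lines):
    """Count whitespace-separated tokens across an iterable of text lines."""
    counts = Counter()
    for line in lines:
        # str.split() with no arguments splits on any run of whitespace,
        # so punctuation stays attached to the token ("work," != "work").
        counts.update(line.split())
    return counts


if __name__ == "__main__":
    # Made-up sample text, not taken from the input files in this post.
    sample = ["the worker can work, and the worker would work"]
    for word, n in sorted(count_words(sample).items()):
        print("%s\t%d" % (word, n))
```

On a cluster the same counting is spread over many map and reduce tasks; this single-process version is only practical for small files.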

View the output

[hadoop@master0 hdfs]$ hdfs dfs -cat /user/hadoop/output/*

work, 63

worker 315

would 62

write-operations. 62

written 62

........

.......

.......

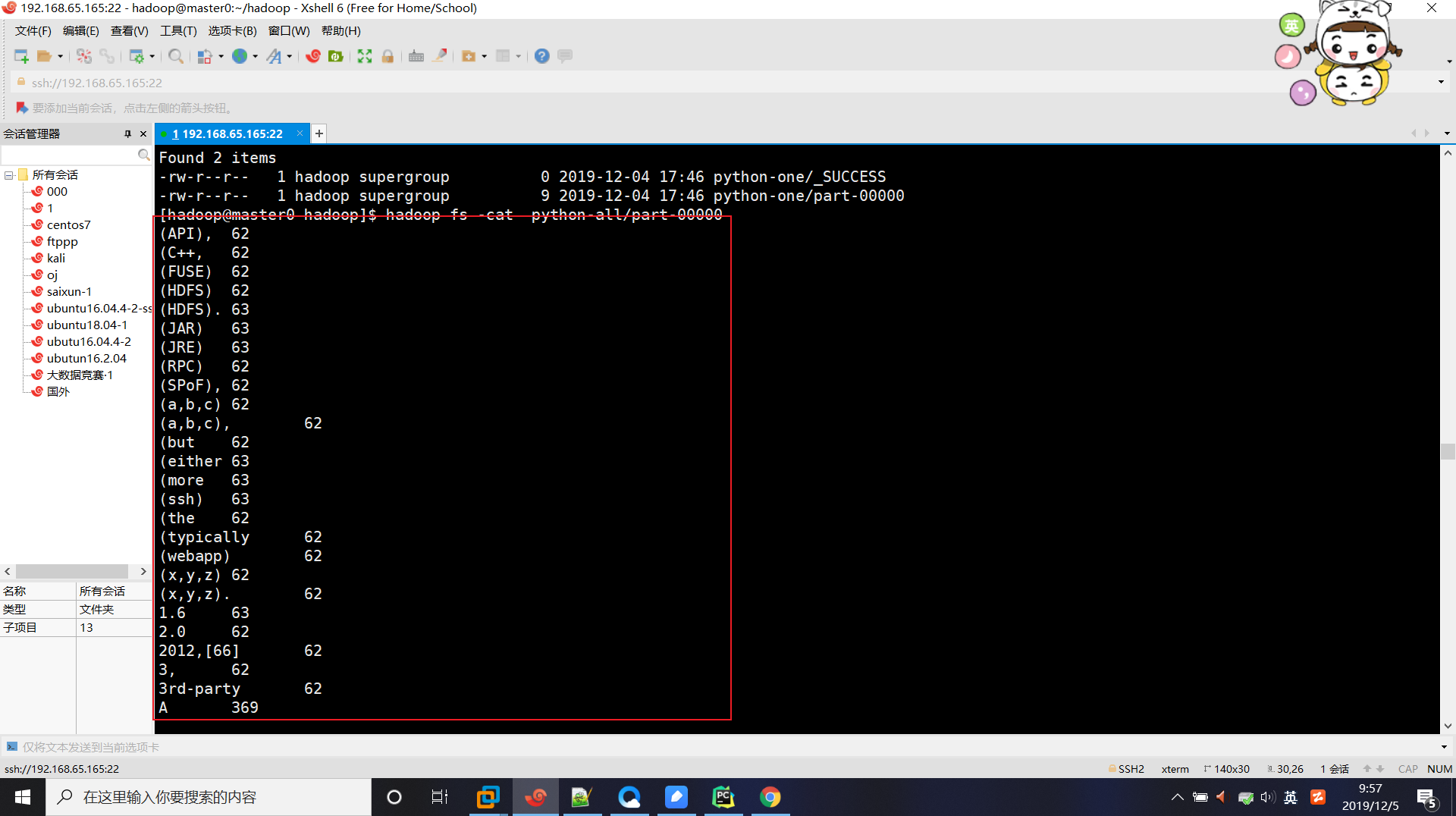
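If the output is saved to a local text file, a few lines of Python can turn it into a dictionary and rank the words. This is a minimal sketch under the assumption that each line holds a word followed by whitespace and an integer count; `parse_counts` and `top_words` are hypothetical helper names, not part of Hadoop.

```python
def parse_counts(text):
    """Turn wordcount output text into a {word: count} dict."""
    counts = {}
    for line in text.splitlines():
        # Split once from the right so the count is always the last field.
        parts = line.rsplit(None, 1)
        if len(parts) != 2:
            continue  # blank lines or elided lines such as "......."
        word, value = parts
        try:
            counts[word] = int(value)
        except ValueError:
            continue  # last field is not a number, skip the line
    return counts


def top_words(counts, n=3):
    """Return the n most frequent (word, count) pairs, ties broken by word."""
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[:n]


if __name__ == "__main__":
    # Lines copied from the output shown above, joined with tabs.
    sample = "work,\t63\nworker\t315\nwould\t62\n"
    print(top_words(parse_counts(sample), 2))
```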

Write a MapReduce program and submit it to Hadoop Streaming

Write the map and reduce functions

#!/usr/bin/env python3
# -*- coding: utf-8 -*-
# @Software: PyCharm
# @virtualenv: workon
# @contact: 1040691703@qq.com
# @Desc: mapper.py, emits "word<TAB>1" for every word read from standard input
__author__ = '未昔/AngelFate'
__date__ = '2019/12/4 20:20'

import sys

for line in sys.stdin:
    line = line.strip()    # drop the trailing newline and surrounding whitespace
    words = line.split()   # split on any run of whitespace
    for word in words:
        print("%s\t%s" % (word, 1))
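To check the map step without a cluster, the same logic can be wrapped in a function and fed a few lines by hand. This is a sketch for local experimentation only; `map_lines` is a hypothetical name and the sample lines are made up.

```python
def map_lines(lines):
    """Yield one 'word<TAB>1' record per whitespace-separated word."""
    for line in lines:
        for word in line.strip().split():
            yield "%s\t%s" % (word, 1)


if __name__ == "__main__":
    # Each word produces its own record; repeated words are not merged here.
    for record in map_lines(["hadoop streaming hadoop", "hdfs"]):
        print(record)
```

The mapper deliberately does no counting of its own. Summing the repeated keys is left to the reduce step.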

#!/usr/bin/env python3
# -*- coding: utf-8 -*-
# @Software: PyCharm
# @virtualenv: workon
# @contact: 1040691703@qq.com
# @Desc: reducer.py, sums the counts for each word; the input must be sorted by word
__author__ = '未昔/AngelFate'
__date__ = '2019/12/4 20:25'

import sys

current_word = None   # the previous word, kept for comparison
current_count = 0     # running total for current_word

for line in sys.stdin:   # read the mapper output line by line
    line = line.strip()
    try:
        # the key is everything before the first tab; split only once
        word, count = line.split('\t', 1)
        count = int(count)
    except ValueError:   # skip lines with no tab or a non-numeric count
        continue
    if current_word == word:   # same word as on the previous line
        current_count += count
    else:
        if current_word is not None:   # a new word starts, so emit the finished one
            print("%s\t%s" % (current_word, current_count))
        current_count = count
        current_word = word

if current_word is not None:   # do not forget to emit the last word
    print("%s\t%s" % (current_word, current_count))
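The reducer only compares each word with the previous one, which works because Hadoop Streaming sorts the mapper output by key before passing it to the reducer. The sketch below expresses the same idea with `itertools.groupby` and uses `sorted()` as a stand-in for that sort step. It is an alternative formulation for local testing, not the script used in this post, and `reduce_records` is a hypothetical name.

```python
from itertools import groupby


def reduce_records(records):
    """Sum counts per word; records must already be sorted by word."""
    parsed = []
    for record in records:
        word, sep, count = record.rstrip("\n").partition("\t")
        if not sep:
            continue  # no tab in the line, skip it
        try:
            parsed.append((word, int(count)))
        except ValueError:
            continue  # non-numeric count, skip it
    # groupby only merges adjacent equal keys, hence the sorting requirement.
    for word, group in groupby(parsed, key=lambda pair: pair[0]):
        yield word, sum(c for _, c in group)


if __name__ == "__main__":
    mapper_output = ["hdfs\t1", "hadoop\t1", "hdfs\t1"]
    # sorted() plays the role of the shuffle/sort phase between map and reduce.
    for word, total in reduce_records(sorted(mapper_output)):
        print("%s\t%s" % (word, total))
```

Passing unsorted records would emit the same word more than once, which is the same failure the streaming reducer would show if its input were not sorted.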

[hadoop@master0 hadoop]$ bin/hadoop jar \
share/hadoop/tools/lib/****.jar \
-file mapper.py -mapper "python mapper.py" \
-file reducer.py -reducer "python reducer.py" \
-input /user/hadoop/input -output /user/hadoop/input

[hadoop@master0 hadoop]$ hadoop fs -cat input/part-00000

(API), 62

(C++, 62

(FUSE) 62

(HDFS) 62

(HDFS). 63

(JAR) 63

(JRE) 63

(RPC) 62

(SPoF), 62

(a,b,c) 62

(a,b,c), 62

(but 62

(either 63

(more 63

(ssh) 63

(the 62

(typically 62

(webapp) 62

(x,y,z) 62

(x,y,z). 62

1.6 63

2.0 62

2012,[66] 62

3, 62

3rd-party 62

A 369

API 124

ARchive 63

An 62

Append. 62

B 124

Because 62

C#, 62

Clients 62

Cocoa, 62

Common 126

Data 62

DataNode 126

DataNode. 63

Distributed 63

Each 62

Environment 63

Erlang, 62

Failure 62

Federation, 62

File 182

Filesystem 62

For 119

HDFS 732

HDFS, 62

HDFS-UI 62

HDFS. 62

HTTP, 62

Hadoop 986

Hadoop-compatible 63

Hadoop. 63

Haskell, 62

I/O 62

In 121

It 62

Java 312

Java, 62

Job 63

JobTracker 63

Linux 62

MapReduce 126

MapReduce/MR1 63

May 62

Moreover, 62

NameNode 126

NameNode) 62

NameNode, 189

OCaml), 62

OS 63

PHP, 62

POSIX 124

POSIX-compliant 62

POSIX-compliant, 62

Perl, 62

Point 62

Python, 62

RAID 124

Ruby, 62

Runtime 63

Secure 63

Shell 63

Similarly, 63

Single 62

Smalltalk, 62

Some 62

System 63

TCP/IP 62

Task 63

TaskTracker, 63

The 552

These 125

This 187

Thrift 62

Tracker, 126

Unix 62

Userspace 62

Web 62

When 125

With 62

YARN/MR2)[58] 63

a 1994

abstractions, 63

access 62

achieved 62

achieves 62

across 250

actions, 62

acts 63

added 62

addition, 62

advantage 124

aims 62

allowing 62

also 62

alternate 63

although 62

always 62

amount 62

an 187

and 1747

and, 63

announced 62

application 124

application. 62

applications 63

applications. 63

approach 63

architecture 63

are 437

around, 62

as 249

automatic 62

available 62

available. 125

awareness 124

awareness: 63

backbone 63

backup 62

backup. 62

be 497

because 62

become 62

been 62

between 187

block 62

blocks 62

both 63

bottleneck 124

browsed 62

builds 62

but 62

by 249

call 62

can 561

capabilities, 62

certain 62

checkpointed 62

choosing 62

client 124

cluster 376

cluster, 63

code 63

command-line 62

commands 62

communicate 62

communication. 62

compliance 62

compute-only 63

concurrent 62

configurations 62

connects 62

consider 62

consists 126

contains 187

copies 62

corruption 63

create 62

criticality. 62

data 934

data, 62

data-intensive 62

data-only 63

data. 63

datanode 62

datanodes, 62

dedicated 63

default 62

demonstrated 62

designed 62

developing 62

differ 62

different 62

directly 62

directories. 62

directory 124

distributed 124

distributed, 62

does 124

due 124

each 124

edit 62

effective 63

engine 63

entire 62

equivalents. 63

especially 62

every 63

example: 62

execute 63

extent 62

fact, 62

fail 62

fail-over. 62

failed 62

failing 63

failure; 63

failures 63

file 810

file-system 249

file-system-specific 63

files 125

files, 62

files. 62

files[65] 62

for 869

framework. 62

from 62

fully 124

generate 125

gigabytes 62

goals 62

goes 124

hardware 63

has 186

have 125

having 124

hence 62

high-availability 62

high. 62

higher. 63

host 63

hosts 62

hosts, 62

huge 124

if 125

images 62

immutable 62

impact 125

in 560

inability 62

includes 125

incorrectly 62

increase 62

increased 62

index, 63

information 63

information, 62

instead 62

interface 62

interface, 62

interpret 62

is 622

is, 63

is. 63

issue, 62

issues 62

it 187

its 124

job 249

job-completion 62

jobs 62

jobs. 62

journal 62

keep 62

lack 62

language 62

large 124

larger 63

letting 62

level 63

libraries.A 6

libraries.File 6

libraries.For 6

libraries.HDFS 15

libraries.Hadoop 16

libraries.In 4

libraries.The 9

local 62

location 63

location. 62

log 62

loss 63

machines. 62

main 62

manage 63

managed 63

management 62

manually 62

map 186

master 126

may 62

memory 63

metadata 124

metadata, 62

method 63

methods 62

might 62

misleading 62

mostly 62

mounted 62

mounted,[62] 62

move 62

multi-node 63

multiple 312

name 125

namely, 62

namenode 496

namenode's 125

namenode, 62

namenodes. 62

namespaces 62

native 62

necessary 63

needed 63

network 249

new 62

node 500

nodes 251

nodes. 189

nodes: 62

nominally 62

non-POSIX 62

nonstandard 63

normally 63

not 310

number 124

occurs, 63

of 1622

offline. 62

on 622

one 125

only 63

operations 62

options 62

or 562

other 248

other. 62

outage 63

over 248

package 63

package, 63

perform 124

performance 124

plus 62

point 62

portable 62

possible 63

power 63

precisely, 63

preventing 63

prevents 62

primary 248

problem 62

problem, 62

procedure 62

programming 62

project 62

protocol 62

provide 125

provides 63

rack 126

rack, 62

rack. 62

rack/switch 63

racks. 63

range 62

rebalance 62

reduce 249

reduces 125

redundancy 125

regularly 62

release 62

reliability 62

remain 63

remote 124

replaced 63

replay 62

replicating 125

replication 124

request. 62

require 125

requirements 62

requires 63

requiring 62

restart 62

running 62

same 125

saves 62

scalability 62

scalable, 62

scheduled 62

schedules 124

scheduling 126

scripts 126

secondary 250

separate 62

served 62

server 188

serves 62

set 63

shell 62

should 63

shutdown 63