机器学习训练手写数字识别(一)

以下场景为,通过机器学习的线性模型和卷积模型,训练数据,最后在浏览器中进行结果测试。

以下为代码,感兴趣的同学可直接配置好环境复用代码.

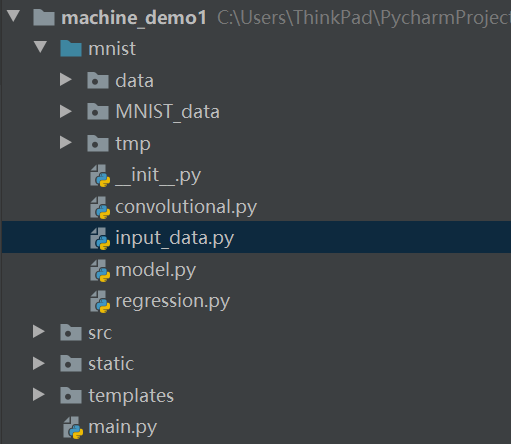

图片为项目结构:

卷积模型

import os

import model

import tensorflow as tf

import input_data

data = input_data.read_data_sets('MNIST_data', one_hot=True)

# 定义模型

with tf.variable_scope('convolutional'):

x = tf.placeholder(tf.float32, [None, 784], name='x')

keep_prob = tf.placeholder(tf.float32)

y, variables = model.convolutional(x, keep_prob)

# 训练

y_ = tf.placeholder('float', [None, 10])

cross_entropy = -tf.reduce_sum(y_ * tf.log(y))

train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)

correct_prediction = tf.equal(tf.arg_max(y, 1), tf.argmax(y_, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

saver = tf.train.Saver(variables)

with tf.Session() as sess:

merged_summary_op = tf.summary.merge_all()

summary_write = tf.summary.FileWriter('tmp/mnist_log/1', sess.graph)

summary_write.add_graph(sess.graph)

sess.run(tf.global_variables_initializer())

for i in range(20000):

batch = data.train.next_batch(50)

if i % 100 == 0:

train_accuracy = accuracy.eval(feed_dict={x: batch[0], y_: batch[1], keep_prob: 1.0})

print("Step %d, training accuracy %g" % (i, train_accuracy))

sess.run(train_step, feed_dict={x: batch[0], y_: batch[1], keep_prob: 0.5})

print(sess.run(accuracy, feed_dict={x: data.test.images, y_: data.test.labels, keep_prob: 1.0}))

path = saver.save(

sess,

os.path.join(os.path.dirname(__file__), 'data', 'convolutional.ckpt'),

write_meta_graph=False,

write_state=False

)

print("Saved:", path)

线性回归模型:

import os

import input_data

import tensorflow as tf

import model

# 下载数据

data = input_data.read_data_sets('MNIST_data/', one_hot=True)

# 建立回归模型

with tf.variable_scope("regression"):

x = tf.placeholder(tf.float32, [None, 784])

y, variables = model.regression(x)

# 训练

y_ = tf.placeholder('float', [None, 10])

cross_entropy = -tf.reduce_sum(y_ * tf.log(y))

train_step = tf.train.GradientDescentOptimizer(0.01).minimize(cross_entropy)

correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

# 保存训练好的参数

saver = tf.train.Saver(variables)

# 开始训练

with tf.Session() as sess:

# 初始化所有的变量

sess.run(tf.global_variables_initializer())

# 定义训练1000次

for _ in range(1000):

batch_xs, batch_ys = data.train.next_batch(100)

sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys})

print((sess.run(accuracy, feed_dict={x: data.test.images, y_: data.test.labels})))

path = saver.save(

sess,

os.path.join(os.path.dirname(__file__), 'data', 'regression.ckpt'),

write_meta_graph=False,

write_state=False

)

print("Saved:", path)

这个必须点赞👍